Harvey Risch was the guest on March 18, 2024 at the IPAK-EDU Director’s Science Webinar, “A Conversation on Evidence-based Medicine”.

This is Part 1; you can find Part 2 here.

Check the Short Cuts section on the home page for the full clip archive.

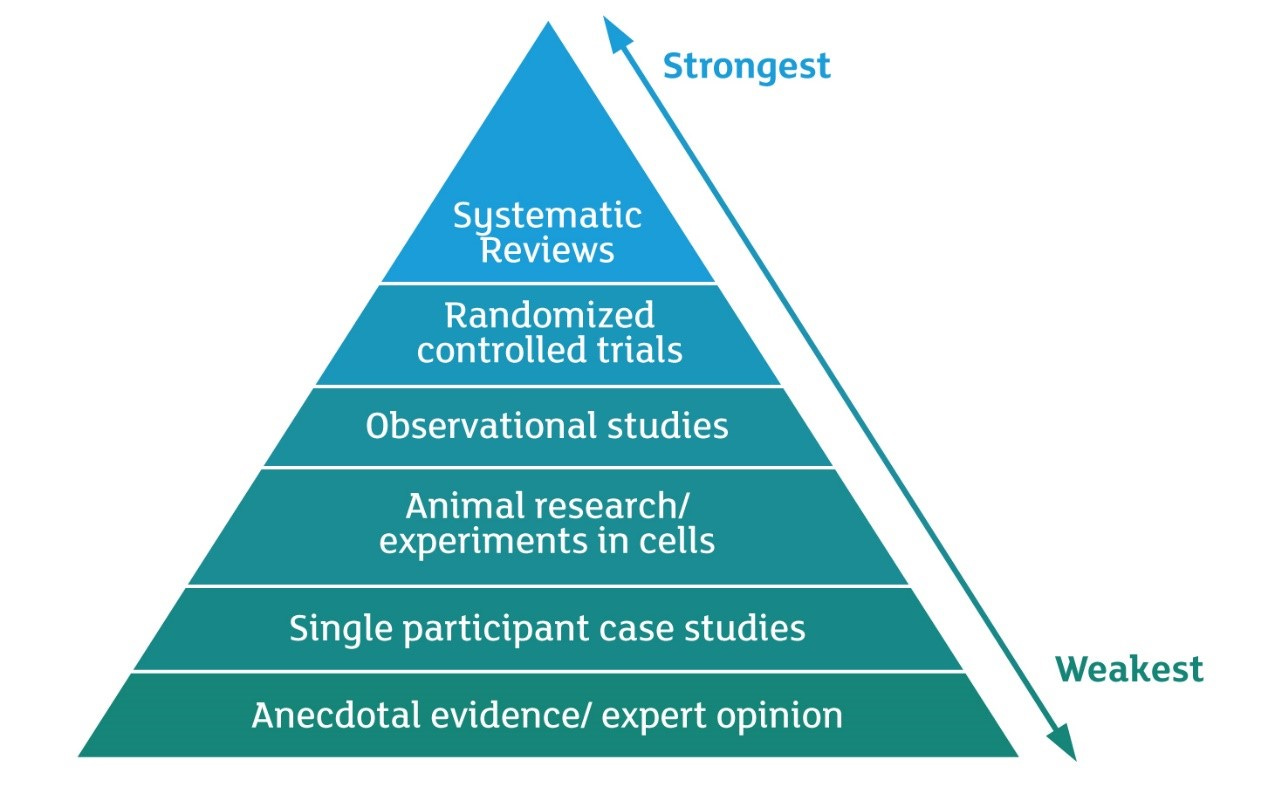

In all likelihood, you’ve heard of The Evidence Pyramid.

It is often cited as establishing a preferred hierarchy of evidence in experimental research, especially medical research.

The origins of the pyramid come from this seminal paper in 1995, entitled, Users' Guides to the Medical Literature — IX. A Method for Grading Health Care Recommendations. The principal authors were Gordon Guyatt and David Sackett. Sackett is revered by many as the ‘father’ of Evidence-Based Medicine [EBM].

In the Abstract, Guyatt and Sackett wrote:

Summarizing the literature to adduce recommendations for clinical practice is an important part of the process. Recently, the health sciences community has reduced the bias and imprecision of traditional literature summaries and their associated recommendations through the development of rigorous criteria for both literature overviews and practice guidelines. Even when recommendations come from such rigorous approaches, however, it is important to differentiate between those based on weak vs strong evidence. Recommendations based on inadequate evidence often require reversal when sufficient data become available, while timely implementation of recommendations based on strong evidence can save lives. In this article, we suggest an approach to classifying strength of recommendations.

The proposed structure has been widely used and referenced. It has also been adjusted and codified in several forms, with various institutions, research groups, and governmental task forces putting forth versions of an evidence grading system built on, or derived from, Guyatt and Sackett's.

Here’s one fairly common version of the pyramid, in graphical form.

And below is another infographic format describing the same hierarchy in a top-down structure. This is essentially a re-packaging of the same structure.

This hierarchical model is a widely held ‘truth’ and is often cited uncritically.

Are there problems with this model?

Harvey Risch thinks so.

Weighing evidence is a subjective process

Note: referenced in the discussion is the work of Austin Bradford Hill, who wrote in 1965, The Environment and Disease: Association or Causation? This outlined what has become more widely known as the Bradford Hill Criteria, which are nine principles applicable to epidemiological evidence and causal relationships. If you’re not familiar, the embedded links above should help.

A commonly held belief (one Risch disagrees with) is that randomized trials are unambiguously ‘better’ than non-randomized studies. This notion is clearly codified in the Evidence Pyramid; however, while randomization can be desirable in theory, the devil is in the details.

The basic idea is that randomization can be employed to balance the potential influence of confounding factors on a given association. But randomization doesn’t always cure confounding. One example of this occurs when the number of outcome events measured is small.

Small numbers don’t cut it, probability-wise.

Here’s a simple example. You have a randomized trial consisting of 10,000 individuals in a treatment arm, and another 10,000 in a placebo arm. Your end point is whether or not someone shows particular symptoms of a disease. You record 2 participants with symptoms in the treatment arm and 5 participants with symptoms in the placebo arm. One might conclude that the treatment is working, since more participants recorded symptoms in the placebo arm than in the treatment arm.

But this wouldn’t hold because the outcome numbers are much too small.
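One way to put a number on this is Fisher's exact test on the 2-versus-5 split. The test is not something the article or Dr. Risch invokes; it is a standard check chosen here for illustration, and the function name `fisher_exact_two_sided` is hypothetical. A minimal sketch using only the Python standard library:

```python
from math import comb

def fisher_exact_two_sided(events_a, n_a, events_b, n_b):
    """Two-sided Fisher's exact p-value for events in two arms of sizes n_a and n_b."""
    total_events = events_a + events_b
    denom = comb(n_a + n_b, total_events)

    def prob(k):
        # Hypergeometric probability that k of the total events land in arm A
        # if the events were spread across both arms purely by chance.
        return comb(n_a, k) * comb(n_b, total_events - k) / denom

    observed = prob(events_a)
    lo = max(0, total_events - n_b)
    hi = min(total_events, n_a)
    # Add up every possible split that is at least as unlikely as the observed one.
    # The small tolerance guards against floating-point ties.
    return sum(p for p in (prob(k) for k in range(lo, hi + 1))
               if p <= observed * (1 + 1e-9))

# Numbers from the example: 2 of 10,000 (treatment) vs 5 of 10,000 (placebo).
p_value = fisher_exact_two_sided(2, 10_000, 5, 10_000)
print(f"two-sided p-value: {p_value:.3f}")
```

The p-value comes out at about 0.45. In other words, a split at least as lopsided as 2 versus 5 would turn up nearly half the time even if the treatment did nothing at all.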

In probability theory, a mathematical concept called the Law of Large Numbers comes into play. The Law of Large Numbers holds that an average of results from a large number of independent random samples will converge on an expected value. You can think of this in the frame of a roulette game. You might win or lose on a single spin of the wheel, but over a large number of spins, wins and losses are expected to converge to a predictable distribution. It is vital to note that this only applies when a sufficiently large number of observations are considered.
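The roulette picture can be simulated in a few lines. This sketch assumes an American wheel (18 red pockets out of 38) and a bet on red; neither detail comes from the article, and the function name `red_win_fraction` is hypothetical. The seed is fixed only so that the run is repeatable.

```python
import random

def red_win_fraction(spins, seed=0):
    """Fraction of spins landing on red, assuming 18 red pockets out of 38."""
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    # Treat pockets 0..17 as red; randrange(38) picks one pocket uniformly.
    wins = sum(1 for _ in range(spins) if rng.randrange(38) < 18)
    return wins / spins

expected = 18 / 38  # long-run win rate for a bet on red
for spins in (10, 1_000, 100_000):
    frac = red_win_fraction(spins)
    print(f"{spins:>7} spins: {frac:.4f} (expected {expected:.4f})")
```

With only 10 spins the observed fraction can sit far from the expected value; with 100,000 spins it stays close. The same logic applies to a trial: it is the number of observed events, not just the number of participants, that has to be large before chance washes out.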

So in our example, even though each participant cohort is relatively large (10,000 persons), the outcome numbers are much too small for randomization to play any role in accounting for potential confounders.

Watch this clip as Dr. Risch fleshes this out.

Weighing the quality of Evidence

The Evidence Pyramid, as a theoretical structure, is also essentially blind to significant forces at play in real life: in practice, research and publication are human activities, and therefore, vulnerable to many corrupting influences.

In Dr. Risch’s words:

“…there’s all of this kind of chicanery that goes on in full public view…”

Risch, in his reserved manner, is emphatic: he doesn’t subscribe to the Pyramid at all.

He believes we must weigh each piece of evidence and assess its value. Thus, the value of a Systematic Review or Meta-Analysis ought to be seen as dependent upon the actual quality of the work, not its position on the Evidence Pyramid.

Stepping back, it might be important to recognize that structures like the Evidence Pyramid are just diagrammatic models; that is, a kind of short cut, but not necessarily one that leads to critical understanding.

It’s a bit like driving to a new location and using your phone to map and plan your route. It can function well sometimes, but as anyone who has been led astray by Google Maps is probably painfully aware, over-reliance on and blind obedience to an algorithm can be a problem.

There is no replacing careful reading, study, discussion, and consideration.

#questioneverything


Subscribers to the IPAK-EDU Director’s Science Webinar get full access to webinar recordings, including the full session with Harvey Risch.

Your support of the webinar and IPAK-EDU makes this possible!

That pyramid is funny. They think actual DIRECT EVIDENCE is the weakest form of evidence;-)

At the top is basically just "authority." Can you imagine if this was how criminal murder trials were conducted? The bloody knife with his fingerprints and a dozen direct eyewitnesses all saying they saw him stab the victim, would be tossed out as "weak" evidence;-)

I am happy to summarize. Having been in the rank and file for 35 years mostly as a promoter of the "peer review" process. It is pay to play and has little credence. Not throwing the baby out with the bath water. Strongly suggesting that most have zero ability to navigate these treacherous seas.

Therefore, in general, it's more and more about less and less. Invert the pyramid. Develop some skills.